The flickering cursor on a bare terminal screen, the hum of servers in the distance – this is where true digital architects are forged. In the shadowed alleys of information technology, the ability to manipulate and control environments without touching physical hardware is not just an advantage; it's a prerequisite for survival. Virtualization, the art of creating digital replicas of physical systems, is the bedrock upon which modern cybersecurity, development, and network engineering stand. Ignoring it is akin to a surgeon refusing to learn anatomy. Today, we dissect the core concepts, the practical applications, and the strategic advantages of mastering virtual machines (VMs), from the ubiquitous Kali Linux and Ubuntu to the proprietary realms of Windows 11 and macOS.

Table of Contents

- You NEED to Learn Virtualization!

- What This Video Covers

- Before Virtualization & Benefits

- Type 2 Hypervisor Demo (VMware Fusion)

- Multiple OS Instances

- Suspend/Save OS State to Disk

- Windows 11 vs 98 Resource Usage

- Connecting VMs to Each Other

- Running Multiple OSs at Once

- Virtualizing Network Devices (Cisco CSR Router)

- Learning Networking: Physical vs Virtual

- Virtual Machine Snapshots

- Inception: Nested Virtualization

- Benefit of Snapshots

- Type 2 Hypervisor Disadvantages

- Type 1 Hypervisors

- Hosting OSs in the Cloud

- Linode: Try It For Yourself!

- Setting Up a VM in Linode

- SSH into Linode VM

- Cisco Modeling Labs: Simulating Networks

- Which Hypervisor to Use for Windows

- Which Hypervisor to Use for Mac

- Which Hypervisor Do You Use? Leave a Comment!

You NEED to Learn Virtualization!

Whether you're aiming to infiltrate digital fortresses as an ethical hacker, architecting the next generation of software as a developer, engineering resilient networks, or diving deep into artificial intelligence and computer science, virtualization is no longer a niche skill. It's a fundamental pillar of modern Information Technology. Mastering this discipline can fundamentally alter your career trajectory, opening doors to efficiencies and capabilities previously unimaginable. It's not merely about running software; it's about controlling your operating environment with surgical precision.

What This Video Covers

This deep dive is structured to provide a comprehensive understanding, moving from the abstract to the concrete. We'll demystify the core principles, explore the practical benefits, and demonstrate hands-on techniques that you can apply immediately. Expect to see real-world examples, including the setup and management of various operating systems and network devices within virtualized landscapes. By the end of this analysis, you'll possess the foundational knowledge to leverage virtualization strategically in your own work.

Before Virtualization & Benefits

Before virtualization, each workload typically ran on its own dedicated physical server. Server rooms were vast, power consumption was astronomical, and most machines sat largely idle. Virtualization shattered these constraints. A software layer called a hypervisor lets a single physical server host multiple isolated operating system instances, each behaving as if it were on its own dedicated hardware. This offers:

- Resource Efficiency: Maximize hardware utilization, reducing costs and energy consumption.

- Isolation: Run diverse operating systems and applications on the same hardware without conflicts. Critical for security testing and sandboxing.

- Flexibility & Agility: Quickly deploy, clone, move, and revert entire systems. Essential for rapid development, testing, and disaster recovery.

- Cost Reduction: Less physical hardware means lower capital expenditure, maintenance, and operational costs.

- Testing & Development Labs: Create safe, isolated environments to test new software, configurations, or exploit techniques without risking production systems.
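To make the resource-efficiency point concrete, here is a minimal sketch of server consolidation using first-fit decreasing bin packing. It assumes CPU load is the only constraint and expresses each workload as a percentage of one host's capacity. The fleet numbers and the 80% per-host cap are hypothetical, and real capacity planning would also weigh RAM, storage I/O, and failover headroom.

```python
def consolidate(loads_pct, capacity_pct=80):
    """Pack per-server CPU loads (percent of one host) onto as few hosts as possible.

    First-fit decreasing: place the biggest workloads first, each on the first
    host that stays at or under `capacity_pct`; otherwise open a new host.
    """
    hosts = []  # each host is a list of workload loads
    for load in sorted(loads_pct, reverse=True):
        if load > capacity_pct:
            raise ValueError(f"workload at {load}% exceeds the {capacity_pct}% host cap")
        for host in hosts:
            if sum(host) + load <= capacity_pct:
                host.append(load)
                break
        else:  # no existing host had room for this workload
            hosts.append([load])
    return hosts


# Hypothetical fleet: ten physical servers, each mostly idle.
fleet = [10, 5, 15, 20, 8, 12, 5, 10, 7, 3]
hosts = consolidate(fleet)
print(f"{len(fleet)} physical servers -> {len(hosts)} virtualization hosts")
```

First-fit decreasing is a fast heuristic, not a guaranteed optimum, but it shows why lightly loaded physical servers collapse onto a handful of hypervisor hosts.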

Type 2 Hypervisor Demo (VMware Fusion)

Type 2 hypervisors, also known as hosted hypervisors, run on top of an existing operating system, much like any other application. VMware Fusion (macOS), VMware Workstation Pro/Player (Windows and Linux), and Oracle VM VirtualBox (Windows, Linux, and macOS) fall into this category. They are excellent for desktop use, development, and learning.

Consider VMware Fusion. Its interface lets users create, configure, and manage VMs with relative ease. You define the virtual hardware (CPU cores, RAM allocation, storage size, and network adapters) to suit the needs of the guest OS. This abstraction layer is key: the guest OS sees only virtual hardware, and the hypervisor maps that virtual hardware onto the host's real CPU, memory, disks, and network adapters, relying on CPU virtualization extensions such as Intel VT-x or AMD-V to run guest code efficiently.
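Behind the GUI, VMware stores each VM's virtual hardware as `key = "value"` lines in a `.vmx` file. The sketch below renders the settings discussed above into that format. It assumes the commonly seen keys `memsize`, `numvcpus`, `guestOS`, and `ethernet0.connectionType`; the VM name is hypothetical, valid `guestOS` identifiers vary by VMware version, and a real `.vmx` needs many more entries (virtual hardware version, disks), so treat this as an illustration rather than a bootable VM definition.

```python
def render_vmx(name, guest_os, memsize_mb, vcpus, net_mode="nat"):
    """Render a minimal .vmx-style config (key = "value" per line) as text."""
    if net_mode not in {"nat", "bridged", "hostonly"}:
        raise ValueError(f"unsupported network mode: {net_mode}")
    settings = {
        "displayName": name,
        "guestOS": guest_os,
        "memsize": str(memsize_mb),  # guest RAM in MB
        "numvcpus": str(vcpus),      # number of virtual CPUs
        "ethernet0.present": "TRUE",
        "ethernet0.connectionType": net_mode,
    }
    return "\n".join(f'{key} = "{value}"' for key, value in settings.items()) + "\n"


print(render_vmx("ubuntu-lab", "ubuntu-64", 4096, 2))
```

In practice you would let Fusion or Workstation generate the file and only inspect or tweak it, but seeing the keys makes clear that "virtual hardware" is just configuration the hypervisor interprets.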

Multiple OS Instances

The true power of Type 2 hypervisors becomes apparent when you realize you can run multiple operating systems concurrently on a single machine. Imagine having Kali Linux running for your penetration testing tasks, Ubuntu for your development environment, and Windows 10 or 11 for specific applications, all accessible simultaneously from your primary macOS or Windows desktop. Each VM operates in its own self-contained environment, preventing interference with the host or other VMs.

Suspend/Save OS State to Disk

One of the most invaluable features of virtualization is the ability to suspend a VM. Unlike simply shutting down, suspending saves the *entire state* of the operating system – all running applications, memory contents, and current user sessions – to disk. This allows you to power down your host machine or close your laptop, and upon resuming, instantly return to the exact state the VM was in. This is a game-changer for workflow continuity, especially when dealing with complex setups or time-sensitive tasks.
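Suspend can also be scripted. The sketch below only builds the command lines, assuming VirtualBox's `VBoxManage controlvm <vm> savestate` / `startvm` and VMware's `vmrun suspend` / `vmrun start` (where the VM is identified by its `.vmx` path). The VM name is hypothetical, and depending on your VMware product `vmrun` may also need a `-T` host-type flag.

```python
import shlex


def suspend_command(hypervisor, vm):
    """Build the CLI call that saves a running VM's full state to disk."""
    if hypervisor == "virtualbox":
        # Writes RAM and device state to disk, then stops the VM.
        return ["VBoxManage", "controlvm", vm, "savestate"]
    if hypervisor == "vmware":
        # `vm` is the path to the VM's .vmx file.
        return ["vmrun", "suspend", vm]
    raise ValueError(f"unknown hypervisor: {hypervisor}")


def resume_command(hypervisor, vm):
    """Build the CLI call that starts the VM again from its saved state."""
    if hypervisor == "virtualbox":
        return ["VBoxManage", "startvm", vm, "--type", "headless"]
    if hypervisor == "vmware":
        return ["vmrun", "start", vm, "nogui"]
    raise ValueError(f"unknown hypervisor: {hypervisor}")


print(shlex.join(suspend_command("virtualbox", "Kali Lab")))
```

To execute rather than print, pass the list to `subprocess.run(cmd, check=True)`; keeping the arguments as a list avoids shell-quoting problems with VM names that contain spaces.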

Windows 11 vs 98 Resource Usage

The evolution of operating systems is starkly illustrated when comparing resource demands. Windows 11 lists a minimum of 4 GB of RAM, 64 GB of storage, and a 64-bit CPU with at least two cores, while Windows 98 asked for just 16 MB of RAM. Windows 98 could arguably run on a potato; Windows 11 needs a respectable allocation of host resources to perform adequately. This highlights the importance of proper resource management and of understanding the baseline requirements for each guest OS when planning your virtualized infrastructure. Allocating too little leads to sluggish guests, while over-allocating can starve your host system.
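A quick budget check helps avoid starving the host. This sketch assumes a hypothetical 16 GB host that keeps 4 GB for itself; the reserve and the per-VM allocations are illustrative choices, not vendor guidance.

```python
def fits_on_host(host_ram_mb, vms, host_reserve_mb=4096):
    """Return (fits, free_mb) after reserving RAM for the host OS itself.

    `vms` maps a VM name to the RAM (MB) you plan to allocate to it.
    """
    allocated = sum(vms.values())
    free = host_ram_mb - host_reserve_mb - allocated
    return free >= 0, free


# Hypothetical plan on a 16 GB laptop.
plan = {"win11": 8192, "win98": 64, "kali": 4096}
ok, free = fits_on_host(16384, plan)
print(ok, free)  # False -64: over budget by 64 MB
```

Dropping the Windows 11 VM to its 4 GB minimum (`{"win11": 4096, "win98": 64, "kali": 4096}`) leaves 4032 MB free, which illustrates how a single oversized guest, not the tiny Windows 98 one, usually breaks the budget.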

Connecting VMs to Each Other

For network engineers and security analysts, the ability to connect VMs is paramount. Hypervisors offer various networking modes:

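- NAT (Network Address Translation): The VM sits on a private virtual network behind the host, and its outbound traffic is translated to the host's IP address. It can reach external networks, but external devices cannot initiate connections to it unless you configure port forwarding.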

- Bridged Networking: The VM gets its own IP address on the host’s physical network, appearing as a distinct device.

- Host-only Networking: Creates a private network between the host and its VMs, isolating them from external networks.

By configuring these modes, you can build complex virtual networks, simulating enterprise environments or setting up isolated labs for malware analysis or exploitation practice.
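As a concrete sketch, these modes map onto VirtualBox's `VBoxManage modifyvm` options: `--nic<N> nat|bridged|hostonly`, `--bridgeadapter<N>`, `--hostonlyadapter<N>`, and `--natpf<N>` for NAT port forwarding. The VM names and adapter names (`eth0`, `vboxnet0`) are hypothetical, and most `modifyvm` changes require the VM to be powered off.

```python
def nic_command(vm, mode, adapter=None, nic=1):
    """Build a VBoxManage modifyvm call that sets one NIC's networking mode."""
    cmd = ["VBoxManage", "modifyvm", vm, f"--nic{nic}", mode]
    if mode in ("bridged", "hostonly"):
        if adapter is None:
            raise ValueError(f"{mode} mode needs a host adapter name")
        # Bridged attaches to a physical host NIC; host-only to a virtual one.
        option = "--bridgeadapter" if mode == "bridged" else "--hostonlyadapter"
        cmd += [f"{option}{nic}", adapter]
    elif mode != "nat":
        raise ValueError(f"unsupported mode: {mode}")
    return cmd


def nat_port_forward(vm, name, host_port, guest_port, nic=1):
    """Forward a host TCP port to a guest port so a NAT'd VM is reachable."""
    # Rule format: name,protocol,host-ip,host-port,guest-ip,guest-port
    rule = f"{name},tcp,,{host_port},,{guest_port}"
    return ["VBoxManage", "modifyvm", vm, f"--natpf{nic}", rule]


for cmd in (nic_command("kali", "hostonly", "vboxnet0"),
            nat_port_forward("ubuntu", "ssh", 2222, 22)):
    print(" ".join(cmd))
```

A common malware-analysis layout uses host-only for the victim VM (no route to the outside world) and NAT with a forwarded SSH port for a utility VM you administer from the host.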

Running Multiple OSs at Once

The ability to run multiple operating systems simultaneously is the essence of multitasking on a grand scale. A security professional might run Kali Linux for network scanning on one VM, a Windows VM with specific forensic tools for analysis, and perhaps a Linux server VM to host a custom C2 framework. Each VM is an independent entity, allowing for rapid switching and parallel execution of tasks. The host machine’s resources (CPU, RAM, storage I/O) become the limiting factor, dictating how many VMs can operate efficiently at any given time.

Virtualizing Network Devices (Cisco CSR Router)

Virtualization extends beyond traditional operating systems. Network Function Virtualization (NFV) allows us to run network appliances as software. For instance, Cisco's Cloud Services Router (CSR) 1000v, since succeeded by the Catalyst 8000V, can be deployed as a VM. This enables network engineers to build and test complex routing and switching configurations, simulate WAN links, and experiment with network security policies within a virtual lab environment before implementing them on physical hardware. Tools like GNS3 or Cisco Modeling Labs (CML) build upon this, allowing for the simulation of entire network topologies.

Learning Networking: Physical vs Virtual

Learning networking concepts traditionally involved expensive physical hardware. Virtualization democratizes this. You can spin up virtual routers, switches, and firewalls within your hypervisor, connect them, and experiment with protocols like OSPF, BGP, VLANs, and ACLs. This not only drastically reduces the cost of learning but also allows for experimentation with configurations that might be risky or impossible on live production networks. You can simulate network failures, test failover mechanisms, and practice incident response scenarios with unparalleled ease and safety.
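Before wiring virtual routers together, it helps to plan the addressing. This sketch uses Python's standard `ipaddress` module to carve /30 point-to-point subnets (two usable addresses each) out of a lab block; the `10.0.0.0/24` range is a hypothetical choice.

```python
import ipaddress
import itertools


def p2p_links(block, count):
    """Carve `count` /30 point-to-point subnets out of `block` for router links."""
    subnets = list(itertools.islice(
        ipaddress.ip_network(block).subnets(new_prefix=30), count))
    if len(subnets) < count:
        raise ValueError(f"{block} holds fewer than {count} /30 subnets")
    links = []
    for net in subnets:
        first, second = net.hosts()  # a /30 has exactly two usable addresses
        links.append((str(net), str(first), str(second)))
    return links


for net, r1, r2 in p2p_links("10.0.0.0/24", 3):
    print(net, r1, r2)
# 10.0.0.0/30 10.0.0.1 10.0.0.2
# 10.0.0.4/30 10.0.0.5 10.0.0.6
# 10.0.0.8/30 10.0.0.9 10.0.0.10
```

Each tuple gives the link subnet and the addresses for the two router interfaces on that link, which you can then paste into your virtual routers' interface configurations before bringing up OSPF or BGP.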

Virtual Machine Snapshots

Snapshots are point-in-time captures of a VM's state: its disk, its configuration and, optionally, the contents of its memory. Think of them as save points in a video game. Before making significant changes (installing new software, applying critical patches, or attempting a risky exploit), taking a snapshot allows you to revert the VM to its previous state if something goes wrong. Because snapshots depend on the VM's base disk, they complement backups rather than replace them. This is an indispensable feature for any serious testing or development work.
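The save-point idea can be modeled in a few lines. This is a toy in-memory sketch, not how any hypervisor stores snapshots: real products use copy-on-write delta or differencing disks instead of full copies. The `ToyVM` and `Snapshot` classes are hypothetical names for illustration; each snapshot records its parent, mirroring the snapshot tree hypervisors display.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Snapshot:
    name: str
    state: dict                          # frozen copy of the VM's state
    parent: Optional["Snapshot"] = None  # snapshots form a tree


@dataclass
class ToyVM:
    state: dict = field(default_factory=dict)
    current: Optional[Snapshot] = None
    snapshots: dict = field(default_factory=dict)

    def take_snapshot(self, name):
        """Freeze the current state as a child of the current snapshot."""
        snap = Snapshot(name, copy.deepcopy(self.state), self.current)
        self.snapshots[name] = snap
        self.current = snap
        return snap

    def revert(self, name):
        """Discard every change made since the named snapshot."""
        snap = self.snapshots[name]
        self.state = copy.deepcopy(snap.state)
        self.current = snap


vm = ToyVM(state={"packages": ["openssh"]})
vm.take_snapshot("clean-install")
vm.state["packages"].append("sketchy-tool")  # a risky change
vm.revert("clean-install")
print(vm.state["packages"])  # ['openssh']
```

The deep copies matter: without them, mutating the live state would silently alter the "frozen" snapshot as well.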

Inception: Nested Virtualization

Nested virtualization refers to running a hypervisor *inside* a virtual machine: for example, running VMware Workstation or VirtualBox within a Windows VM that itself runs on a physical machine. For the inner hypervisor to run efficiently, the outer one must expose the CPU's virtualization extensions (Intel VT-x or AMD-V) to the guest. This capability is crucial for scenarios like testing hypervisor software, developing virtualization management tools, or creating complex virtual lab environments where multiple layers of virtualization are required. While it demands significant host resources, it unlocks advanced testing and demonstration capabilities.
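A quick way to check whether a Linux guest on an x86 host actually received those extensions is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below parses that text; ARM systems report a different `Features` field and are not covered. In VirtualBox, exposing the extensions is typically done with `VBoxManage modifyvm <vm> --nested-hw-virt on` while the outer VM is powered off.

```python
from pathlib import Path


def hw_virt_flags(cpuinfo_text):
    """Return the hardware-virtualization flags listed in /proc/cpuinfo text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags.update(value.split())
    return flags & {"vmx", "svm"}  # vmx = Intel VT-x, svm = AMD-V


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        found = hw_virt_flags(cpuinfo.read_text())
        print(found or "no vmx/svm flag: a nested hypervisor will lack hardware acceleration")
```

Run it inside the middle-layer VM: an empty result means the outer hypervisor is not passing VT-x/AMD-V through.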

Benefit of Snapshots

The primary benefit of snapshots is **risk mitigation and workflow efficiency**. Security researchers can test exploits on a clean VM snapshot, revert if detected or if the exploit fails, and try again without a lengthy rebuild. Developers can test software installations and configurations, reverting to a known good state if issues arise. For network simulations, snapshots allow quick recovery after experimental configuration changes that might break the simulated network. It transforms risky experimentation into a predictable, iterative process.

Type 2 Hypervisor Disadvantages

While convenient, Type 2 hypervisors are not without their drawbacks, especially in production or high-performance scenarios:

- Performance Overhead: They rely on the host OS, introducing an extra layer of processing, which can lead to slower performance compared to Type 1 hypervisors.

- Security Concerns: A compromise of the host OS can potentially compromise all VMs running on it.

- Resource Contention: The VM competes for resources with the host OS and its applications, leading to unpredictable performance.

For critical server deployments, dedicated cloud environments, or high-density virtualization, Type 1 hypervisors are generally preferred.

Type 1 Hypervisors

Type 1 hypervisors, also known as bare-metal hypervisors, run directly on the host's physical hardware rather than as an application on a general-purpose operating system. Examples include VMware ESXi, Microsoft Hyper-V, and KVM (Kernel-based Virtual Machine), which turns the Linux kernel itself into a hypervisor. They are designed for enterprise-class environments due to their:

- Superior Performance: Direct access to hardware minimizes overhead, offering near-native performance.

- Enhanced Security: A smaller attack surface, since there is no general-purpose host OS (with its own applications and services) to compromise.

- Scalability: Built to manage numerous VMs efficiently across server clusters.

These are the workhorses of data centers and cloud providers.

Hosting OSs in the Cloud

The concept of virtualization has also moved to the cloud. Cloud providers like Linode, AWS, Google Cloud, and Azure offer virtual machines (often called instances) as a service. You can spin up servers with chosen operating systems, CPU, RAM, and storage configurations on demand, without managing any physical hardware. This is ideal for deploying applications, hosting websites, running complex simulations, or even setting up dedicated pentesting environments accessible from anywhere.

Linode: Try It For Yourself!

For those looking to experiment with cloud-based VMs without a steep learning curve or prohibitive costs, Linode offers a compelling platform. They provide straightforward tools for deploying Linux servers in the cloud. To get started, you can often find promotional credits that allow you to test their services extensively. This is an excellent opportunity to understand cloud infrastructure, deploy Kali Linux for remote access, or host a web server.

Get started with Linode and explore their offerings: Linode Cloud Platform. If that link encounters issues, try this alternative: Linode Alternative Link. Note that these credits typically have an expiration period, often 60 days.

Setting Up a VM in Linode

The process for setting up a VM on Linode is designed for simplicity. After creating an account and securing any available credits, you navigate their dashboard to create a new "Linode Instance." You select your desired operating system image – common choices include various Ubuntu LTS versions, Debian, or even Kali Linux. You then choose a plan based on the CPU, RAM, and storage you require, and select a data center location for optimal latency. Once provisioned, your cloud server is ready to be accessed.

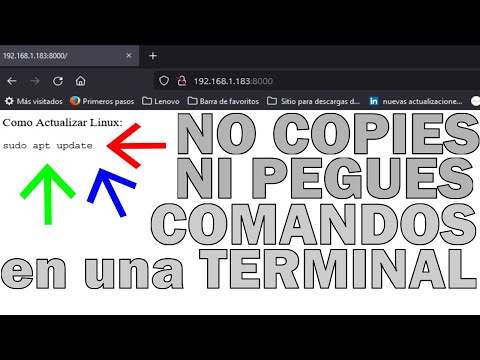
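The same provisioning can be scripted against the Linode API v4. This sketch only builds the HTTP request, assuming the `POST https://api.linode.com/v4/linode/instances` endpoint with a bearer token and the `label`, `region`, `type`, `image`, `root_pass`, and `authorized_keys` fields. The default region, plan, and image IDs are examples that may change, so list the current ones via the API (`/v4/regions`, `/v4/linode/types`, `/v4/images`) before relying on them. Sending the request creates a billable server.

```python
import json
import urllib.request

API_URL = "https://api.linode.com/v4/linode/instances"


def build_create_request(token, label, root_pass, region="us-east",
                         plan="g6-nanode-1", image="linode/ubuntu22.04",
                         ssh_keys=()):
    """Build (but do not send) a Linode API v4 'create instance' request."""
    payload = {
        "label": label,
        "region": region,         # data center location
        "type": plan,             # plan ID: CPU/RAM/storage bundle
        "image": image,           # OS image
        "root_pass": root_pass,
        "authorized_keys": list(ssh_keys),  # SSH public keys for root
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Submit the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)
```

Keep the token out of source code (for example, read it from an environment variable you choose) and prefer passing `ssh_keys` so you can log in with a key instead of the root password.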

SSH into Linode VM

Secure Shell (SSH) is the standard protocol for remotely accessing and managing Linux servers. Once your Linode VM is provisioned, its public IP address appears in the dashboard; the root password, and optionally your SSH public keys, are set when you create the instance. Using an SSH client (like OpenSSH on Linux/macOS, PuTTY on Windows, or the built-in SSH client in Windows Terminal), you can establish a secure connection to your cloud server. This grants you command-line access, allowing you to install software, configure services, and manage your VM as if you were physically present.
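With OpenSSH, the connection boils down to `ssh -i <key> root@<ip>`. The sketch below builds that command and a matching `~/.ssh/config` entry so that `ssh linode-lab` is enough afterwards. The alias `linode-lab`, the key path, and the IP `203.0.113.10` (a documentation-only address) are placeholders; generate a key first with `ssh-keygen -t ed25519` if you don't have one.

```python
import ipaddress
import os


def ssh_command(host_ip, user="root", key="~/.ssh/id_ed25519", port=22):
    """Build an OpenSSH argv for a cloud VM, validating the IP first."""
    ip = ipaddress.ip_address(host_ip)  # raises ValueError on a mistyped address
    return ["ssh", "-i", os.path.expanduser(key), "-p", str(port), f"{user}@{ip}"]


def ssh_config_entry(alias, host_ip, user="root", key="~/.ssh/id_ed25519"):
    """Render a ~/.ssh/config block for the VM."""
    ip = ipaddress.ip_address(host_ip)
    return (f"Host {alias}\n"
            f"    HostName {ip}\n"
            f"    User {user}\n"
            f"    IdentityFile {key}\n")


print(ssh_config_entry("linode-lab", "203.0.113.10"))
```

Append the printed block to `~/.ssh/config`, or pass `ssh_command(...)` to `subprocess.run` to connect directly.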

Cisco Modeling Labs: Simulating Networks

For in-depth network training and simulation, tools like Cisco Modeling Labs (CML), formerly Cisco VIRL, are invaluable. CML allows you to build sophisticated network topologies using virtualized Cisco network devices. You can deploy virtual routers, switches, firewalls, and even virtual machines running full operating systems within a simulated environment. This is critical for anyone pursuing Cisco certifications like CCNA or CCNP, or for network architects designing complex enterprise networks. It provides a realistic sandboxed environment to test configurations, protocols, and network behaviors.

Which Hypervisor to Use for Windows

For Windows users, several robust virtualization options exist:

- VMware Workstation Pro/Player: Mature, feature-rich, and widely adopted. Workstation Pro offers advanced features for professionals, while Player has been a capable free option for basic use. VMware has since made Workstation Pro free for personal use and retired Player.

- Oracle VM VirtualBox: A popular, free, and open-source hypervisor that runs on Windows, Linux, and macOS. It's versatile and performs well for most desktop virtualization needs.

- Microsoft Hyper-V: Built into the Pro, Enterprise, and Education editions of Windows. It's a Type 1 hypervisor, often providing excellent performance for Windows guests.

Your choice often depends on your specific needs, budget, and whether you require advanced features like complex networking or snapshot management.

Which Hypervisor to Use for Mac

Mac users have distinct, high-quality choices:

- VMware Fusion: A direct competitor to Parallels Desktop, offering a polished user experience and strong performance. It was historically strongest on Intel-based Macs, and recent versions also support Apple Silicon.

- Parallels Desktop: Known for its seamless integration with macOS and excellent performance, particularly for running Windows on Mac. It often excels in graphics-intensive applications and gaming within VMs.

- Oracle VM VirtualBox: Also available for macOS, offering a free and open-source alternative with solid functionality.

Apple's transition to Apple Silicon (M1, M2, etc.) has introduced complexities. Hypervisors on these Macs (like Parallels and the latest Fusion versions) run ARM-based VMs, predominantly Linux and Windows for ARM; x86 guests cannot be virtualized natively and would require emulation.
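Before downloading an ISO, it is worth confirming that the guest's CPU architecture matches the host's. This sketch normalizes the names Python's `platform.machine()` commonly reports (`arm64` on Apple Silicon macOS, `aarch64` on ARM Linux, `x86_64` or `AMD64` on Intel/AMD). One caveat: an x86 build of Python running under Rosetta reports `x86_64` even on Apple Silicon. The alias table is an assumption covering the common cases only.

```python
import platform

# Hypothetical alias table: common spellings of the same instruction set.
ARCH_ALIASES = {
    "x86_64": "x86_64", "amd64": "x86_64",
    "arm64": "arm64", "aarch64": "arm64",
}


def normalize_arch(name):
    """Map an architecture string to a canonical name."""
    name = name.lower()
    return ARCH_ALIASES.get(name, name)


def can_virtualize_natively(guest_arch, host_machine=None):
    """True if the guest ISA matches the host ISA (no emulation needed)."""
    host = normalize_arch(host_machine or platform.machine())
    return normalize_arch(guest_arch) == host


print("host:", normalize_arch(platform.machine()))
print("x86_64 guest native?", can_virtualize_natively("x86_64"))
```

On an M-series Mac this would steer you toward ARM64 images, such as the ARM64 builds of Ubuntu or Kali, rather than the usual amd64 ones.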

Which Hypervisor Do You Use? Leave a Comment!

The landscape of virtualization is constantly evolving. Each hypervisor has its strengths and weaknesses, and the "best" choice is heavily dependent on your specific use case, operating system, and technical requirements. Whether you're spinning up Kali Linux VMs for security audits, testing development builds on Ubuntu, or simulating complex network scenarios with Cisco devices, understanding the underlying principles of virtualization is key. What are your go-to virtualization tools? What challenges have you faced, and what innovative solutions have you implemented? Drop your thoughts, configurations, and battle scars in the comments below. Let's build a more resilient digital future, one VM at a time.

Arsenal of the Operator/Analyst

- Hypervisors: VMware Workstation Pro, Oracle VM VirtualBox, VMware Fusion, Parallels Desktop, KVM, XenServer.

- Cloud Platforms: Linode, AWS EC2, Google Compute Engine, Azure Virtual Machines.

- Network Simulators: Cisco Modeling Labs (CML), GNS3, EVE-NG.

- Tools: SSH clients (OpenSSH, PuTTY), Wireshark (for VM network traffic analysis).

- Books: "Mastering VMware vSphere" series (for enterprise), "The Practice of Network Security Monitoring" (for threat hunting within VMs).

- Certifications: VMware Certified Professional (VCP), Cisco certifications (CCNA, CCNP) requiring network simulation.

Engineer's Verdict: Is It Worth Adopting?

Virtualization is not an option; it's a strategic imperative. For anyone operating in IT, from the aspiring ethical hacker to the seasoned cloud architect, proficiency in virtualization is non-negotiable. Type 2 hypervisors offer unparalleled flexibility for desktop use, research, and learning, while Type 1 hypervisors and cloud platforms provide the scalability and performance required for production environments. The ability to create, manage, and leverage isolated environments underpins modern security practices, agile development, and efficient network operations. Failing to adopt and master virtualization is a direct path to obsolescence in this field.

Frequently Asked Questions

- What is the difference between Type 1 and Type 2 hypervisors?

- Type 1 hypervisors run directly on hardware (bare-metal), offering better performance and security. Type 2 hypervisors run as applications on top of an existing OS (hosted).

- Can I run Kali Linux in a VM?

- Absolutely. Kali Linux is designed to run in many environments, and the project publishes prebuilt images for VMware and VirtualBox, making it ideal for security testing and practice.

- How does virtualization impact security?

- Virtualization enhances security through isolation, allowing for safe sandboxing and testing of potentially malicious software. However, misconfigurations or compromises of the host can pose risks.

- Is cloud virtualization the same as local VM virtualization?

- Both use virtualization principles, but cloud virtualization abstracts hardware management, offering scalability and accessibility as a service.

- What are snapshots used for?

- Snapshots capture the state of a VM, allowing you to revert to a previous point in time. This is crucial for safe testing, development, and recovery.

The Contract: Harden Your Digital Lab

Your mission, should you choose to accept it, is to establish a secure and functional virtual lab. Select one of the discussed hypervisors (VirtualBox, VMware Player, or Fusion, depending on your host OS). Then, deploy a second operating system – perhaps Ubuntu Server for a basic web server setup, or Kali Linux for practicing network scanning against your own local network (ensure you have explicit permission for any targets!). Document your setup process, including resource allocation (RAM, CPU, disk space) and network configuration. Take at least three distinct snapshots at critical stages: before installing the OS guest additions/tools, after installing a web server, and after configuring a basic firewall rule.
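If you pick VirtualBox, the three snapshots can be taken from the command line. This sketch builds the commands, assuming `VBoxManage snapshot <vm> take <name> [--description=...]` and `VBoxManage snapshot <vm> restore <name>` (restore needs the VM powered off). The VM name `ubuntu-lab` and the stage names are hypothetical; on VMware the rough equivalents are `vmrun snapshot` and `vmrun revertToSnapshot`.

```python
import shlex

# Hypothetical snapshot names for the three checkpoints in the exercise.
STAGES = ["pre-guest-additions", "post-web-server", "post-firewall-rule"]


def snapshot_take(vm, name, description=""):
    """Build a VBoxManage call that records a named snapshot."""
    cmd = ["VBoxManage", "snapshot", vm, "take", name]
    if description:
        cmd.append(f"--description={description}")
    return cmd


def snapshot_restore(vm, name):
    """Build a VBoxManage call that reverts the (powered-off) VM to a snapshot."""
    return ["VBoxManage", "snapshot", vm, "restore", name]


for stage in STAGES:
    print(shlex.join(snapshot_take("ubuntu-lab", stage)))
print(shlex.join(snapshot_restore("ubuntu-lab", STAGES[1])))
```

Logging these commands alongside your RAM, CPU, and disk choices gives you the documented setup the exercise asks for.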

This hands-on exercise will solidify your understanding of VM management, resource allocation, and the critical role of snapshots. Report back with your findings and any unexpected challenges encountered. The digital frontier awaits your command.