The digital ether hums with promises of unfettered communication. Yet when the earth trembles and the waves surge, those promises can shatter, leaving behind only silence. Today, we dissect an incident in which the very platform championing free expression choked the flow of life-saving information. A critical delay, caused by API rate limits, turned a supposed lifeline into a bottleneck. This is not just about Twitter; it's about the inherent fragility of our networked dependencies.

The Paradox of the Birdcage: Free Speech Under Lock and Key

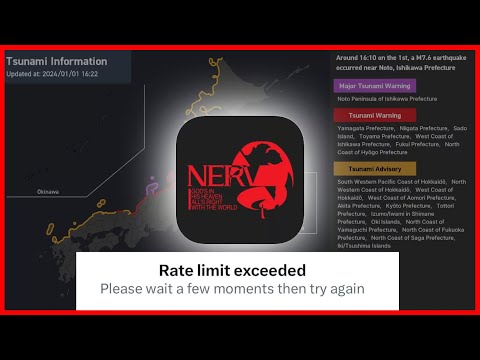

Elon Musk's bold $44 billion acquisition of Twitter was heralded with a clarion call for an unvarnished digital public square. The promise: an unwavering commitment to free speech. Reality, however, tends to paint a grimmer picture. The crackdown on parody accounts and the imposition of stringent usage limits stand in stark contradiction to this proclaimed ethos. The incident involving Nerve, Japan's government-backed disaster prevention program, throws this paradox into sharp relief. When a platform designed to amplify voices becomes a barrier during a crisis, its core tenets are called into question. Is this true free speech, or a carefully curated echo chamber dictated by backend limitations?

Nerve's Digital Shackles: When Rate Limits Become Disasters

Nerve wasn't just another social media account; it was a vital cog in Japan's emergency response machinery. Tasked with disseminating critical alerts during earthquakes and tsunamis, its reliance on Twitter's API became a critical vulnerability. The imposed rate limits, throttling the very speed required for timely warnings, transformed a lifeline into a digital noose. This isn't a hypothetical scenario; it's a stark demonstration of the precariousness of entrusting life-saving services to proprietary platforms. The incident exposes the inherent risks when critical infrastructure is built upon non-free software, subject to the whims and commercial imperatives of a private entity.
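To make the effect of a posting quota concrete, here is a minimal simulation of a sliding-window rate limit. It is only a sketch: `simulate_delivery`, the quota of 3 posts, and the 60-second window are illustrative assumptions, not Twitter's actual limits or Nerve's real traffic.

```python
from collections import deque


def simulate_delivery(alert_times, max_posts, window_seconds):
    """Return the time at which each alert is actually posted.

    alert_times: sorted, non-negative times (seconds) when alerts become ready.
    max_posts / window_seconds: a hypothetical quota, not a real platform value.
    """
    recent = deque()  # post times that still count against the quota
    delivered = []
    clock = 0
    for ready in alert_times:
        # Alerts go out in order and never before they are ready.
        clock = max(clock, ready)
        # Forget posts that have aged out of the window.
        while recent and recent[0] <= clock - window_seconds:
            recent.popleft()
        if len(recent) >= max_posts:
            # Quota exhausted: wait until the oldest post leaves the window.
            clock = recent.popleft() + window_seconds
        recent.append(clock)
        delivered.append(clock)
    return delivered


# Five alerts arrive one second apart during a burst of seismic activity.
ready_at = [0, 1, 2, 3, 4]
posted_at = simulate_delivery(ready_at, max_posts=3, window_seconds=60)
delays = [posted - ready for posted, ready in zip(posted_at, ready_at)]
print(delays)
```

Under these assumed numbers the first three alerts go out immediately, while the fourth and fifth wait almost a full minute. In an earthquake or tsunami warning, that is exactly the kind of delay the quota creates at the worst possible moment.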

The Price of Silence: Financial Realities of Crisis Communication

As a government initiative, Nerve operates under the scrutiny of public budgets. The $5,000 per month required for a premium API plan, or $60,000 per year, presents a stark financial hurdle. This isn't a minor operational cost; it's a significant drain on resources that could otherwise be allocated to direct aid or infrastructure improvement. The incident highlights a fundamental tension: the growing commercialization of essential digital services and the potential for these costs to become prohibitive for public good initiatives. How can we ensure vital information flows freely when the channels themselves come with a hefty, ongoing price tag?

Twitter as the Oracle: A Double-Edged Sword in the Digital Dark

Despite the controversies and the critical failure during the Nerve incident, Twitter's reach remains undeniable. It serves as a global broadcast system, an immediate pulse for breaking news and unfolding events. For initiatives like Nerve, its immediacy and widespread adoption are unparalleled. However, this dependence breeds a dangerous myopia. Relying solely on a private platform for public safety infrastructure is akin to building an emergency shelter on shifting sands. The Nerve incident is a loud, clear warning bell, demanding a reassessment of our digital dependencies and the potential consequences when private interests intersect with public welfare.

Musk's Intervention: The Ghost in the Machine Responds

Amidst the escalating crisis, the digital world watched as pleas for intervention reached the platform's new proprietor. The issue, stubbornly persistent despite Nerve's premium subscription, only found resolution when Twitter employees bypassed standard protocols to alert Elon Musk directly. While the problem was eventually patched, the delay was critical. It underscored a terrifying reality: the fate of life-saving communications can hinge on the personal intervention of a single executive. This isn't a robust system; it's a precarious house of cards, susceptible to the whims and attention spans of its overlords. The incident serves as a stark reminder that our capacity for emergency response can be held hostage by the internal workings of a private corporation.

Lessons from the Brink: Rebuilding Resilient Communication Networks

The resolution of the Nerve issue, though eventually achieved, was marred by an unacceptable delay. This incident provides a harsh, yet invaluable, education for all entities that rely on digital platforms for essential services. It calls for robust contingency plans, a deep understanding of potential vulnerabilities, and a healthy skepticism towards proprietary solutions for critical infrastructure. We must move beyond simply reacting to crises and proactively build systems that are resilient, redundant, and insulated from the arbitrary limitations of third-party services.
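One way to turn "a deep understanding of potential vulnerabilities" into something reviewable is a small inventory of the channels a service depends on. The sketch below is hypothetical throughout: the `Channel` fields, the `audit` function, and every number except the $5,000 monthly plan mentioned earlier are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Channel:
    name: str
    posts_per_hour_limit: Optional[int]  # None means no documented cap
    monthly_cost_usd: float
    self_hosted: bool


def audit(channels, required_posts_per_hour, monthly_budget_usd):
    """Map each channel name to a list of risks found for it."""
    findings = {}
    for ch in channels:
        issues = []
        limit = ch.posts_per_hour_limit
        if limit is not None and limit < required_posts_per_hour:
            issues.append("rate limit below peak need")
        if ch.monthly_cost_usd > monthly_budget_usd:
            issues.append("cost exceeds budget")
        if not ch.self_hosted:
            issues.append("terms controlled by third party")
        findings[ch.name] = issues
    return findings


# Illustrative inventory; the limits and the website cost are made up.
INVENTORY = [
    Channel("social_api", posts_per_hour_limit=50,
            monthly_cost_usd=5000, self_hosted=False),
    Channel("own_website", posts_per_hour_limit=None,
            monthly_cost_usd=200, self_hosted=True),
]

report = audit(INVENTORY, required_posts_per_hour=120, monthly_budget_usd=1000)
for name, issues in report.items():
    print(name, "->", issues or "no issues found")
```

A channel that collects several findings is not necessarily unusable, but it should never be the only path an alert can take.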

Engineer's Verdict: The Fragility of Centralized Digital Lifeblood

Twitter's rate limiting on Nerve wasn't just a technical glitch; it was a symptom of a deeper illness. Our increasing reliance on a handful of centralized, non-free platforms for critical functions – from emergency alerts to financial transactions – creates systemic vulnerabilities. While these platforms offer convenience and reach, they inherently lack the transparency, control, and guaranteed uptime required for true public safety. The Nerve incident demonstrates that when the backend rules change, or when financial pressures dictate a new policy, life-saving communication can grind to a halt. The trade-off for "free" speech on these platforms often comes at the cost of guaranteed access during our most desperate hours.

Operator/Analyst Arsenal

- Open-Source Intelligence (OSINT) Tools: For monitoring diverse information channels without API dependency.

- Decentralized Communication Platforms: Exploring federated alternatives like Mastodon or Matrix for resilient messaging.

- Disaster Response Simulation Software: Tools for training and testing emergency protocols independent of third-party platforms.

- Technical Books: "The Art of Invisibility" by Kevin Mitnick, "Ghost in the Wires" by Kevin Mitnick, "Countdown to Zero Day" by Kim Zetter.

- Certifications: EC-Council Certified Incident Handler (ECIH), Certified Ethical Hacker (CEH).
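As an illustration of the decentralized option in the list above, this sketch prepares a post for a Mastodon instance through its REST endpoint `POST /api/v1/statuses`. The instance URL, the token, and the helper name `build_status_request` are placeholders invented for this example; the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_status_request(instance_url, access_token, text, idempotency_key=None):
    """Build (but do not send) a request that posts `text` as a public status."""
    body = json.dumps({"status": text, "visibility": "public"}).encode("utf-8")
    request = urllib.request.Request(
        url=instance_url.rstrip("/") + "/api/v1/statuses",
        data=body,
        method="POST",
        headers={
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
    )
    if idempotency_key:
        # Lets a retry after a timeout avoid publishing the same alert twice.
        request.add_header("Idempotency-Key", idempotency_key)
    return request


# Placeholder values; a real deployment would load these from configuration.
req = build_status_request(
    "https://alerts.example/", "PLACEHOLDER_TOKEN",
    "Test alert: this is a drill.", idempotency_key="drill-0001",
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req, timeout=10)
```

The point of the exercise is control rather than convenience: on an instance you operate yourself, the posting policy is set by you and not by a third party's pricing tier.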

Defensive Workshop: Strengthening the Emergency Alert Network

- Vulnerability analysis of current platforms:

  - Identify which communication platforms (e.g. Twitter, Facebook, SMS gateways) enforce restrictive API rate limits or usage policies.

  - Evaluate the costs of premium plans or dedicated API access needed to keep data flowing continuously and without restrictions.

  - Review each platform's terms of service to understand the conditions of use and the circumstances under which service may be interrupted.

- Development of a contingency communication plan:

  - Design a plan that incorporates multiple communication channels (e.g. SMS, email, emergency radio, independent mobile applications, dedicated websites).

  - Establish clear protocols for activating each channel according to the type and severity of the emergency.

  - Implement push notification systems for mobile applications built specifically for emergency alerts, minimizing dependence on external APIs.

- Exploration and adoption of open-source and decentralized alternatives:

  - Evaluate decentralized messaging platforms that do not depend on a single operator's servers and API policies (e.g. Matrix, Mastodon).

  - Investigate emergency radio protocols or community networks that can operate independently.

  - Develop or adapt open-source solutions for alert dissemination, retaining full ownership of the code and the infrastructure.

- Periodic tests and drills:

  - Run regular drills of the entire emergency communication system to identify failures and bottlenecks.

  - Validate the effectiveness of the contingency channels and their response speed in simulated scenarios.

  - Ensure that all relevant personnel are trained in the use of the different channels and communication protocols.
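The contingency plan and drill steps above can be sketched together in a few lines. Everything here is an assumption made for illustration: the `Severity` levels, the `dispatch` and `run_drill` helpers, and the stub senders standing in for real SMS, web, or social integrations.

```python
import time
from enum import IntEnum


class Severity(IntEnum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


def dispatch(message, severity, channels):
    """Send on every channel activated at this severity, isolating failures.

    channels: list of (name, min_severity, send_callable) tuples.
    Returns (delivered_names, failed_names).
    """
    delivered, failed = [], []
    for name, min_severity, send in channels:
        if severity < min_severity:
            continue  # this channel is reserved for more severe events
        try:
            send(message)
            delivered.append(name)
        except Exception:
            # One failing channel must never block the others.
            failed.append(name)
    return delivered, failed


def run_drill(channels, max_latency_seconds):
    """Time a test message on every channel and report failures or slowness."""
    report = {}
    for name, _min_severity, send in channels:
        start = time.monotonic()
        error = None
        try:
            send("[DRILL] test alert, no action required")
        except Exception as exc:
            error = repr(exc)
        latency = time.monotonic() - start
        report[name] = {
            "ok": error is None and latency <= max_latency_seconds,
            "latency_s": latency,
            "error": error,
        }
    return report


# Stub senders: real ones would call an SMS gateway, a web server, an API.
outbox = []


def send_web(message):
    outbox.append(("web", message))


def send_sms(message):
    outbox.append(("sms", message))


def send_social(message):
    raise RuntimeError("429 Too Many Requests")  # simulated rate limit


CHANNELS = [
    ("web", Severity.INFO, send_web),
    ("social", Severity.INFO, send_social),
    ("sms", Severity.CRITICAL, send_sms),
]

print(dispatch("Tsunami warning", Severity.CRITICAL, CHANNELS))
print(run_drill(CHANNELS, max_latency_seconds=1.0))
```

Sending on all activated channels in parallel, rather than falling back from one to the next, is a deliberate choice in this sketch: during an emergency a duplicate alert costs far less than a missing one.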

Frequently Asked Questions

1. Is Twitter the only platform facing criticism for contradicting its free speech promises?

No. Although Twitter has come under particular scrutiny, other platforms have been criticized for similar contradictions, which underscores the inherent challenges of upholding genuine freedom of expression online.

2. How did Elon Musk intervene to resolve Nerve's API limit problem?

Twitter employees escalated the problem directly to Elon Musk. After his intervention the API limits were lifted, allowing Nerve to broadcast its alerts in real time again.

3. What steps can governments take to avoid such delays in critical services?

Governments should consider diversifying across platforms, investing in robust infrastructure of their own, and negotiating terms that guarantee rapid communication during emergencies.

4. Are there alternatives to using non-free software for critical services?

Yes. Exploring open-source alternatives and investing in custom solutions can offer greater control and flexibility in critical situations.

5. What lessons can businesses learn from Nerve's experience?

Businesses should prioritize contingency planning, weigh the financial implications of premium plans, and stay alert to possible limitations when depending on third-party platforms for crucial services.

The Contract: Forging Digital Resilience

The network is a double-edged sword. It promises instant global connection, but it is only as strong as its weakest link. The Nerve incident and Twitter's API limits are not just a technical failure; they are a call to action. Depending on private platforms for critical public safety infrastructure is too risky a bet. Your contract is simple: do not entrust your safety to the goodwill of an algorithm or the intervention of a CEO. Identify the points of failure in your own communication chains today. What happens if your primary channel is silenced? Do you have a plan B, C, and D? Demonstrate your commitment to resilience: design and document a detailed contingency communication strategy for a critical service you care about. Share the key principles of your plan in the comments.