The digital realm is a landscape rife with whispers of forgotten code and phantom threats. Among these specters, the legend of Polybius stands out – a tale woven from urban myth and a chilling narrative of technological overreach. But what lies beneath the sensationalism? As security professionals, our task isn't to chase ghosts, but to dissect their anatomy, understand their potential impact, and build impregnable defenses against them. This is not a dive into a video game's lore; it's an analysis of a potential information warfare artifact and its implications from a blue team perspective.

In the early 1980s, the nascent arcade scene was a hub of social interaction and technological fascination. It was a time before widespread internet connectivity, when physical spaces often housed the cutting edge of digital entertainment. Portland, Oregon, became the alleged epicenter of a bizarre phenomenon surrounding a game that seemingly materialized overnight: Polybius. Reports painted a disturbing picture: gamers experiencing debilitating migraines, cardiac distress, seizures, and strokes. Amnesia and hallucinations were also among the reported side effects, creating an atmosphere of fear and intrigue.

The game itself was described as highly addictive, a potent cocktail of engagement that, paradoxically, brewed aggression. Fights erupted, and the narrative culminated in a grim claim: a player allegedly stabbed to death, with the violence attributed to those who had succumbed to Polybius's pull. A game this dangerous, yet this captivating, raised an obvious question: why would it be publicly accessible? The answer, according to the legend, was chillingly simple: the government, or elements within it, were the architects.

Table of Contents

- The Phantom Arcade and the Genesis of Fear

- Deconstructing the Legend: Potential Mechanisms of Harm

- Polybius as a Metaphor for Modern Threats

- Fortifying the Digital Perimeter: Lessons from the Phantom

- Arsenal of the Defender

- Frequently Asked Questions

- The Contract: Securing the System Against Psychological Warfare

The Phantom Arcade and the Genesis of Fear

The Polybius legend is a prime example of how technology can be imbued with fear and suspicion, especially when its origins are obscured. Set in 1981, the narrative places the game within the context of early fears surrounding video games' influence on youth. The reported symptoms – neurological distress, psychological disturbances, and heightened aggression – are potent narrative devices that tap into societal anxieties about the unknown effects of emerging technologies. From a security standpoint, the core of this legend isn't the accuracy of the symptoms, but the *perception* of a threat that can incapacitate individuals through a digital interface.

The narrative explicitly states the game was "highly addictive." This is a critical component. Addictive mechanisms in digital interfaces are a well-studied area, often employed to maximize user engagement. However, when coupled with unsubstantiated claims of severe physical and psychological harm, addiction becomes a vector for a perceived existential threat. The escalation to violence, culminating in a death, transforms the game from a mere entertainment product into a weapon, albeit an allegorical one.

"The line between entertainment and weaponization is as thin as a corrupted data packet."

Deconstructing the Legend: Potential Mechanisms of Harm

While Polybius itself is likely a myth, the *concept* of a digital entity designed to harm is not. Let's deconstruct the alleged mechanisms of harm from a technical and psychological perspective, treating the legend as a case study in potential adversarial influence:

- Subliminal Messaging & Sensory Overload: Early arcade games often pushed the boundaries of visual and auditory design. The legend suggests Polybius might have employed rapid flashing lights, disorienting patterns, and discordant sounds. Technically, this could be achieved through pulsating light patterns (stroboscopic effects) or rapid, high-contrast visual shifts at flash rates capable of provoking seizures in photosensitive players. In modern terms, this echoes concerns about malicious content, firmware, or software engineered to exploit such sensitivities.

- Behavioral Manipulation: The "addictive" nature and "hyper-aggression" could be attributed to carefully crafted reward loops, variable reinforcement schedules, and psychological triggers embedded within the game's design. These techniques, while common in game design for engagement, could be weaponized to induce specific behavioral outcomes. Think of phishing kits and scareware that target human psychology through social engineering, or ransomware notes with countdown timers designed to force urgent, panic-driven decisions.

- Data Collection & Exploitation: The most plausible, though still speculative, government connection points towards data collection. Was Polybius a front for psychological profiling, surveillance, or even testing the efficacy of psychological warfare techniques? Early 'games' that were more akin to psychological experiments could have been used to gauge reactions to stimuli, collect biometric data (if advanced sensors were feasible then), or assess susceptibility to manipulation.

- Information Warfare Vector: If Polybius was indeed a government-created tool, its purpose could have been to test public susceptibility to psychological manipulation, gather intelligence on public reactions to stimuli, or even sow discord. This aligns with modern concepts of cognitive warfare, where the minds of a population become the battlefield.

The key takeaway here for defenders is that a "threat" doesn't always manifest as a traditional virus or malware. It can exploit human psychology, neurological sensitivities, or simply sow confusion and fear through narrative and engineered perception.
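The one alleged mechanism with a well-documented real-world counterpart is flashing content. As a rough illustration of how a defender might screen visual content for it, here is a minimal sketch in Python. It assumes the input is a list of per-frame relative luminance values between 0 and 1 (how those values are extracted from video is out of scope), and it borrows the "no more than three flashes in any one-second period" idea from the WCAG flash guidance in simplified form. The function names and the 0.10 change threshold are illustrative choices; the sketch ignores screen area, red flashes, and the darker-image condition, so it is not a compliance tool.

```python
from typing import Sequence


def count_transitions(luminance: Sequence[float], min_delta: float = 0.10) -> int:
    """Count alternating luminance swings of at least min_delta."""
    if not luminance:
        return 0
    transitions = 0
    direction = 0          # 0 = no swing seen yet, 1 = rising, -1 = falling
    ref = luminance[0]     # most recent extreme (peak or trough)
    for value in luminance[1:]:
        if direction == 1 and value > ref:
            ref = value    # still rising: extend the peak
        elif direction == -1 and value < ref:
            ref = value    # still falling: extend the trough
        elif abs(value - ref) >= min_delta:
            # A large enough move away from the last extreme is a new swing.
            direction = 1 if value > ref else -1
            transitions += 1
            ref = value
    return transitions


def exceeds_flash_limit(luminance: Sequence[float], fps: int,
                        max_flashes: int = 3) -> bool:
    """Return True if any one-second window holds more than max_flashes.

    A flash is treated here as a pair of opposing transitions.
    """
    window = fps + 1  # frames spanning one second, endpoints included
    last_start = max(0, len(luminance) - window)
    for start in range(last_start + 1):
        flashes = count_transitions(luminance[start:start + window]) // 2
        if flashes > max_flashes:
            return True
    return False


if __name__ == "__main__":
    strobe = [0.0, 1.0] * 15                 # frame-by-frame strobe at 30 fps
    slow_blink = ([1.0] * 15 + [0.0] * 15) * 2  # one blink per second
    print(exceeds_flash_limit(strobe, fps=30))
    print(exceeds_flash_limit(slow_blink, fps=30))
```

The sliding window is deliberately brute-force; for long clips a production tool would track transitions incrementally rather than recount each window.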

Polybius as a Metaphor for Modern Threats

The Polybius narrative, though rooted in a bygone era, serves as a potent metaphor for contemporary threats in cybersecurity and information operations:

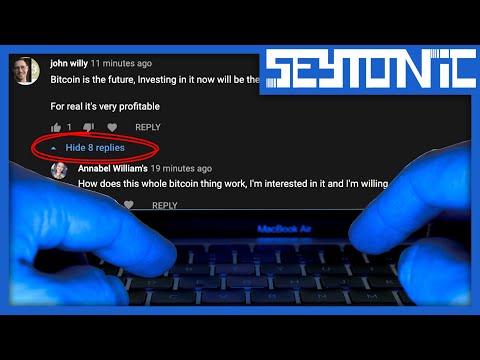

- Disinformation Campaigns: Just as the legend of Polybius spread rapidly through word of mouth, modern disinformation campaigns can be orchestrated online, shaping public perception and eroding trust in institutions or technologies without direct physical interaction. Botnets, deepfakes, and coordinated social media manipulation are the modern-day equivalents of whispered rumors in a dark arcade.

- Exploitation of Human Psychology: Phishing, social engineering, and manipulative advertising all leverage psychological vulnerabilities. The Polybius legend highlights how a seemingly innocuous interface can be twisted to psychological ends, a tactic still very much in play today.

- Advanced Persistent Threats (APTs) with Psychological Components: While APTs are primarily focused on data exfiltration or system disruption, some state-sponsored operations increasingly incorporate psychological warfare to demoralize targets, spread misinformation, or influence public opinion. The "game" in this context is often the manipulation of information ecosystems.

- Sensory and Neurological Attack Vectors: Research into how digital stimuli affect the human brain is still nascent. Work on "adversarial audio" and "visual attacks" that exploit perception, whether a machine's or a person's, is an active research area and a potential source of future threats.

The legend of Polybius is a cautionary tale about the unknown impacts of technology, a theme that remains acutely relevant in our hyper-connected world. It reminds us that our defenses must extend beyond mere code and firewalls to encompass the human element – our perceptions, our psychological vulnerabilities, and our susceptibility to manipulation.

Fortifying the Digital Perimeter: Lessons from the Phantom

While we can't block a mythical arcade game, the principles derived from its legend inform our defensive posture:

- Information Hygiene: Be critical of sensationalized narratives, especially those concerning technology. Verify sources and understand that urban legends often mask real, but more mundane, technological vulnerabilities or societal fears.

- Digital Well-being: Just as players in the Polybius myth suffered physical and psychological distress, excessive or unmoderated engagement with digital content can have negative impacts. Promote healthy digital habits and awareness of potential cognitive load from relentless notifications or overwhelming information streams.

- Cognitive Security: Train individuals to recognize psychological manipulation tactics, whether in phishing emails, propaganda, or even subtly designed user interfaces. Understanding how our own minds can be exploited is a critical layer of defense.

- Secure Design Principles in Software & Hardware: If Polybius were real, its underlying code and hardware would be the prime targets for analysis. This reinforces the importance of secure coding practices, rigorous hardware security audits, and transparency in digital product development. Understanding the "attack surface" of any digital system, including its potential psychological impact, is paramount.

- Threat Intelligence and Myth-Busting: Actively monitoring and analyzing emerging threats, including online narratives and psychological operations, is crucial. The ability to distinguish between a genuine threat and a myth is a core competency for any security professional.
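To make the cognitive security point concrete, the following is a minimal sketch of how a training exercise might highlight manipulation cues in a message. The cue categories and phrase lists are hypothetical examples invented for illustration, not a vetted taxonomy, and simple keyword matching like this would miss most real-world phrasing. Its value is as a teaching aid that shows people what to look for, not as a filter.

```python
import re
from typing import Dict, List

# Hypothetical cue phrases, grouped by the pressure tactic they signal.
CUES: Dict[str, List[str]] = {
    "urgency": ["immediately", "urgent", "within 24 hours", "act now"],
    "authority": ["ceo", "it department", "legal", "compliance"],
    "fear": ["suspended", "locked", "breach", "penalty"],
    "reward": ["gift card", "bonus", "prize", "refund"],
}


def find_cues(message: str) -> Dict[str, List[str]]:
    """Return the cue phrases found in message, keyed by category."""
    text = message.lower()
    hits: Dict[str, List[str]] = {}
    for category, phrases in CUES.items():
        # Whole-word matching avoids hits inside unrelated words.
        matched = [
            phrase for phrase in phrases
            if re.search(r"\b" + re.escape(phrase) + r"\b", text)
        ]
        if matched:
            hits[category] = matched
    return hits


if __name__ == "__main__":
    sample = ("URGENT: your account will be suspended unless you act now. "
              "- IT Department")
    for category, phrases in find_cues(sample).items():
        print(f"{category}: {', '.join(phrases)}")
```

A message that stacks several categories at once (urgency plus authority plus fear, as in the sample) is a reasonable prompt to slow down and verify through a separate channel.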

Arsenal of the Defender

To combat contemporary threats that echo the narrative of Polybius, defenders rely on a diversified arsenal:

- Threat Intelligence Platforms (TIPs): Tools that aggregate and analyze threat data from various sources, helping to identify coordinated disinformation campaigns or emerging psychological warfare tactics.

- Behavioral Analytics Tools: Systems that monitor user and system behavior for anomalies, detecting deviations that could indicate compromise or manipulation.

- Psychological Profiling & Social Engineering Awareness Training: Educational programs designed to equip individuals with the cognitive tools to identify and resist manipulative tactics.

- Content Verification & Fact-Checking Tools: Software and services that assist in verifying the authenticity and accuracy of digital information.

- Auditing and Code Review Frameworks: Methodologies and tools for scrutinizing software and hardware to identify vulnerabilities that could be exploited for harmful purposes, whether direct code exploits or indirect psychological ones.
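As a small illustration of what behavioral analytics means in practice, here is a minimal sketch that flags an observation deviating sharply from a baseline, using a plain z-score. The metric (daily login counts), the function names, and the threshold of 3.0 are assumptions made for the example. Commercial tools use far richer models, and a z-score assumes a roughly stable, bell-shaped baseline, which real user behavior often is not.

```python
from statistics import mean, stdev
from typing import Sequence


def zscore(baseline: Sequence[float], observed: float) -> float:
    """Standard deviations between observed and the baseline mean.

    Requires at least two baseline values (needed for a sample stdev).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any difference at all is extreme.
        return 0.0 if observed == mu else float("inf")
    return (observed - mu) / sigma


def is_anomalous(baseline: Sequence[float], observed: float,
                 threshold: float = 3.0) -> bool:
    """Flag observations more than threshold deviations from baseline."""
    return abs(zscore(baseline, observed)) > threshold


if __name__ == "__main__":
    logins_per_day = [4, 5, 6, 5, 4, 6, 5]  # illustrative baseline week
    print(is_anomalous(logins_per_day, 6))   # an ordinary day
    print(is_anomalous(logins_per_day, 40))  # a sudden spike
```

An alert from a check like this is a starting point for investigation, not proof of compromise or manipulation.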

Frequently Asked Questions

Q1: Was Polybius a real game?

While the legend is compelling, there is no concrete evidence to support the existence of an arcade game named Polybius that caused the reported widespread harm. It is widely considered an urban legend, possibly inspired by genuine concerns or isolated incidents. However, the narrative serves as a potent allegory for technological fears.

Q2: Could a video game cause physical harm like seizures or strokes?

Certain light patterns in video games are known to trigger seizures in individuals with photosensitive epilepsy. This is a recognized medical phenomenon. However, attributing strokes or cardiac events directly to gameplay is not scientifically substantiated and belongs to the realm of legend or pseudoscience.

Q3: What are the modern equivalents of 'psychological warfare' in cybersecurity?

Modern equivalents include disinformation campaigns, sophisticated social engineering, propaganda disseminated through digital channels, and potentially the exploitation of cognitive biases to influence decision-making during security incidents (e.g., panic-driven actions during a ransomware attack).

The Contract: Securing the System Against Psychological Warfare

The legend of Polybius, while a ghost story from the digital past, offers a stark reminder: the most dangerous attacks often exploit the human element. Whether it's a mythical arcade game or a modern disinformation campaign, the objective can be the same – to destabilize, to manipulate, and to incapacitate through psychological rather than purely technical means. Our role as defenders is to build resilience not just in code, but in cognition. We must be vigilant against threats that operate in the shadows of perception, understanding that the 'attack surface' extends far beyond the network perimeter into the very minds of the users we protect.

The Contract: Fortify Your Cognitive Defenses

Your mission, should you choose to accept it, is to analyze a recent online narrative or news story that has evoked strong emotional reactions. Identify its potential psychological manipulation vectors. How could this narrative be used to disrupt a team's productivity, sow distrust within an organization, or influence critical decision-making? Document your findings, focusing on the *how* and *why* of the manipulation, and share your thoughts on potential counter-narratives or awareness training. The digital battlefield is as much psychological as it is technical. Prove your understanding.