"The network is a battlefield, and malware is the enemy's advanced weapon. Understanding its anatomy is not about replicating destruction, but about building impenetrable defenses."

The digital shadows teem with unseen threats, whispers of code designed to disrupt, steal, or destroy. Malware, a malignant outgrowth of malicious intent, is the silent assassin in this perpetual cyber war. For the defender, the analyst, the hunter, understanding malware isn't an academic exercise; it's a matter of survival. We don't need to run malware to understand it; we need to dissect it, expose its inner workings, and learn to recognize its footprint before it breaches the perimeter. This is not about creating more sophisticated attacks, but about forging more resilient defenses. This is the art and science of malware analysis from the blue team's perspective.

The Anatomy of a Threat: Deconstructing Malware

Malware isn't a monolithic entity; it's a diverse ecosystem of malicious software, each with its own modus operandi. Understanding these classifications is the first step in developing targeted countermeasures.

Malware Types: A Categorical Breakdown

- Viruses: Self-replicating code that attaches itself to legitimate programs. Their primary goal is to spread and infect other systems.

- Worms: Standalone malware that replicates itself to spread to other computers, often exploiting network vulnerabilities without human intervention.

- Trojans: Disguised as legitimate software, Trojans trick users into installing them. Once inside, they can perform a variety of malicious actions, from data theft to providing backdoor access.

- Ransomware: Encrypts a victim's files, demanding a ransom payment for the decryption key. This is a direct financial assault on individuals and organizations.

- Spyware: Secretly monitors user activity, collecting sensitive information like login credentials, browsing habits, and financial data.

- Adware: Displays unwanted advertisements, often aggressively, and can sometimes lead to the installation of more malicious software.

- Rootkits: Designed to gain and keep unauthorized, privileged access to a computer while hiding their own presence, making them extremely difficult to detect and remove.

- Bots/Botnets: Infected computers controlled remotely by an attacker, often used to launch distributed denial-of-service (DDoS) attacks or send spam in massive volumes.

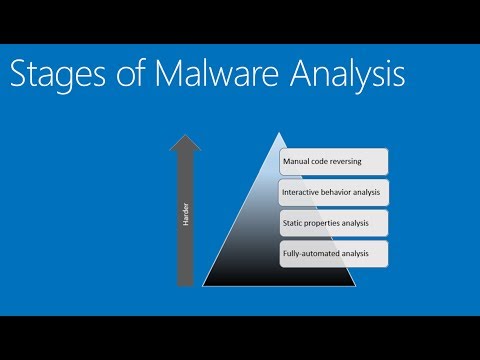

Malware Analysis Techniques: The Analyst's Arsenal

To defend against these digital phantoms, we must learn their language, their methods, their weaknesses. Malware analysis is the process of deconstructing malicious code to understand its functionality, origin, and potential impact. This requires a methodical approach, utilizing a suite of tools and techniques.

Static Analysis: Reading the Blueprint

Static analysis involves examining malware without executing it. It's like studying a criminal's plans without them ever leaving their hideout.

- File Hashing: Calculating cryptographic hashes (MD5, SHA-1, SHA-256) of the malware sample. The hash serves as a fingerprint for identifying and tracking the sample across threat intelligence feeds; prefer SHA-256, since MD5 and SHA-1 are vulnerable to collisions. Tools like `md5sum` or `sha256sum` are fundamental.

- String Analysis: Extracting readable strings from the binary. These can reveal file paths, URLs, IP addresses, registry keys, function names, or error messages that hint at the malware's behavior. Tools like `strings` are invaluable here.

- Disassembly: Converting machine code into assembly language. This provides a low-level view of the program's logic, allowing analysts to understand instructions, control flow, and API calls. IDA Pro, Ghidra, and radare2 are industry standards.

- Decompilation: Attempting to reconstruct higher-level source code (like C or C++) from machine code. While not always perfect, it can significantly aid in understanding complex logic.

- Header Analysis: Examining the file headers (e.g., PE headers for Windows executables) to understand file structure, sections, import/export tables, and compilation timestamps. Tools like `PEview` or `pestudio` are excellent for this.
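Two of the static techniques above, file hashing and header analysis, can be sketched in a few lines of Python. This is a minimal illustration rather than a replacement for `pestudio` or `PEview`: the function names `file_hashes` and `pe_summary` are hypothetical, and the parser reads only three fields of the COFF header (machine type, section count, and compilation timestamp) without validating anything else.

```python
import hashlib
import struct
from datetime import datetime, timezone


def file_hashes(data: bytes) -> dict:
    """Return MD5, SHA-1 and SHA-256 hex digests of the given bytes."""
    return {name: hashlib.new(name, data).hexdigest()
            for name in ("md5", "sha1", "sha256")}


def pe_summary(data: bytes) -> dict:
    """Read a few fields from the DOS and COFF headers of a PE file."""
    if data[:2] != b"MZ":
        raise ValueError("missing MZ signature")
    # The 4-byte field at offset 0x3C (e_lfanew) points to the PE signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    # COFF header follows the signature:
    # Machine (2 bytes), NumberOfSections (2 bytes), TimeDateStamp (4 bytes).
    machine, n_sections, timestamp = struct.unpack_from(
        "<HHI", data, pe_offset + 4)
    compiled = datetime.fromtimestamp(timestamp, tz=timezone.utc)
    return {
        "machine": hex(machine),
        "sections": n_sections,
        "compiled": compiled.isoformat(),
    }
```

Both functions take the raw bytes of a sample, so the file is only ever read, never executed. Keep in mind that the compilation timestamp is trivially forged and should be treated as a hint, not evidence.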

Dynamic Analysis: Observing the Beast in Action

Dynamic analysis involves executing the malware in a controlled, isolated environment (a sandbox) to observe its behavior in real-time. This is where we see the theory put into practice.

- Sandboxing: Running the malware within an isolated virtual machine or dedicated hardware that prevents it from affecting the host system or network. Tools like Cuckoo Sandbox, Any.Run, or even manual VM setups are crucial.

- Process Monitoring: Observing the creation, modification, and termination of processes. Tools like Process Explorer, Procmon (Process Monitor) from Sysinternals, or `ps` and `top` on Linux systems are essential.

- Network Traffic Analysis: Monitoring network connections, DNS requests, HTTP/S traffic, and data exfiltration attempts. Wireshark is indispensable for this, coupled with tools like `tcpdump`.

- Registry Monitoring: Tracking changes made to the Windows Registry, which malware often uses for persistence or configuration. Procmon is excellent for this.

- File System Monitoring: Observing file creation, deletion, modification, and encryption activities.

- Memory Forensics: Analyzing the contents of system memory (RAM) while the malware is running. This can reveal unpacked code, decrypted strings, or hidden processes missed by disk-based analysis. Tools like Volatility are paramount for memory analysis.
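File system monitoring can be approximated without any special tooling by comparing a directory's state before and after a sample runs. The sketch below is a simplified stand-in for what Procmon records live: it only sees the net result, not the sequence of operations, and the names `snapshot` and `diff_snapshots` are hypothetical.

```python
import hashlib
from pathlib import Path


def snapshot(root: str) -> dict:
    """Map each file under root (by relative path) to its SHA-256 digest."""
    base = Path(root)
    state = {}
    for path in base.rglob("*"):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            state[str(path.relative_to(base))] = digest
    return state


def diff_snapshots(before: dict, after: dict) -> dict:
    """Classify paths as created, deleted, or modified between snapshots."""
    common = before.keys() & after.keys()
    return {
        "created": sorted(after.keys() - before.keys()),
        "deleted": sorted(before.keys() - after.keys()),
        "modified": sorted(p for p in common if before[p] != after[p]),
    }
```

In a lab run, you would call `snapshot` on a directory of interest inside the analysis VM, detonate the sample, call `snapshot` again, and pass both results to `diff_snapshots`. A burst of modified files with no deletions is one pattern worth checking against the ransomware behavior described earlier.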

Evasion Techniques: Outsmarting the Analyst

The most sophisticated malware doesn't just attack systems; it actively tries to evade detection and analysis. Understanding these tricks is vital for defenders to adapt their methods.

- Anti-Disassembly: Techniques to confuse disassemblers, making static analysis more difficult.

- Anti-Debugging: Code that detects the presence of a debugger and alters its behavior or terminates execution.

- Anti-VM/Sandbox Detection: Malware that checks if it's running in a virtualized environment and may alter its behavior or refuse to execute. Look for checks on CPU features, hardware IDs, or specific registry keys.

- Code Obfuscation: Techniques to make the code harder for humans to read and understand, such as encrypting strings, using junk code, or employing complex control flow.

- Packing/Encryption: Compressing or encrypting the malware's payload, which is only unpacked in memory during execution. This means the malicious code isn't directly visible in the initial file.

- Time-Based Execution: Malware designed to execute only after a certain date or time, or after a specific number of reboots, to avoid detection during initial analysis.
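Packing and encryption leave a statistical trace that defenders can measure: compressed or encrypted data looks close to random, so its Shannon entropy approaches 8 bits per byte. The sketch below computes that value for any byte string. The `looks_packed` helper and its default threshold of 7.2 are illustrative assumptions, not an established cutoff; legitimate compressed resources can score just as high.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of the data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum((count / total) * math.log2(count / total)
                for count in Counter(data).values())


def looks_packed(data: bytes, threshold: float = 7.2) -> bool:
    """Heuristic flag: entropy above an assumed threshold suggests packing."""
    return shannon_entropy(data) > threshold
```

Applied per section rather than to the whole file, a check like this helps decide whether static analysis of the on-disk sample is worthwhile or whether the payload has to be recovered from memory first.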

Countermeasures: Building the Digital Fortress

Armed with the knowledge of malware types, analysis techniques, and evasion tactics, we can now focus on building robust defenses.

Defensive Strategies and Tools

- Endpoint Detection and Response (EDR): Advanced security solutions that go beyond traditional antivirus by continuously monitoring endpoints for suspicious activity, providing real-time threat detection, and enabling rapid response.

- Network Intrusion Detection/Prevention Systems (NIDS/NIPS): Monitor network traffic for malicious patterns and can alert or actively block threats.

- Security Information and Event Management (SIEM): Collects and analyzes security logs from various sources across the network, providing a centralized view of security events and enabling correlation for threat detection.

- Threat Intelligence Platforms (TIPs): Aggregate and analyze threat data from multiple sources to provide actionable intelligence on emerging threats, indicators of compromise (IoCs), and attacker tactics.

- Regular Patching and Updates: A fundamental defense against malware that exploits known vulnerabilities. Keeping operating systems and applications up-to-date is non-negotiable.

- Principle of Least Privilege: Granting users and processes only the permissions necessary to perform their functions. This limits the damage malware can inflict if a compromised account is used.

- User Education: Training users to recognize phishing attempts, avoid suspicious links and downloads, and practice safe computing habits. Many infections start with a single click.

The Engineer's Verdict: The Analyst's Imperative

Malware analysis is not merely an academic pursuit for incident responders or security researchers; it is a critical component of any robust cybersecurity strategy. For the defender, understanding *how* an attack works is paramount to building defenses that can withstand it. Static and dynamic analysis are two sides of the same coin, each providing essential insights. Static analysis reveals the blueprint, the intended functionality, while dynamic analysis shows you the chaos it can unleash. The effectiveness of your defense hinges on your ability to anticipate the attacker's moves. By dissecting malware, you gain the intelligence needed to craft better detection rules, more effective isolation strategies, and ultimately, a more resilient security posture. Ignoring malware analysis is akin to fighting an unseen enemy in the dark: you're already at a disadvantage.

The Operator/Analyst's Arsenal

For those serious about diving deep into the digital abyss, the right tools are indispensable. This is not about theoretical knowledge; it's about practical application.

- For Static Analysis: IDA Pro (industry standard, commercial), Ghidra (free, powerful, NSA-developed), radare2 (open-source, powerful command-line framework), pestudio (malware info tool).

- For Dynamic Analysis: Cuckoo Sandbox (open-source automated sandbox), Any.Run (cloud-based interactive sandbox), Sysinternals Suite (Procmon, Process Explorer - essential Windows utilities), Wireshark (network protocol analyzer), Volatility Framework (memory forensics).

- Operating Systems: Dedicated analysis VMs running Windows (with specific versions required by malware) and Linux (e.g., REMnux or Kali Linux).

- Books: "Practical Malware Analysis" by Michael Sikorski and Andrew Honig, "The Art of Memory Forensics" by Michael Hale Ligh et al.

- Certifications: GIAC Certified Forensic Analyst (GCFA) and GIAC Reverse Engineering Malware (GREM); Offensive Security Certified Professional (OSCP) for a foundational understanding of exploit vectors.

Practical Workshop: Hardening Your Analysis Environment

This practical guide focuses on setting up a safe and effective environment for dynamic malware analysis.

- Isolate your Network: Create a dedicated, isolated network for your analysis VMs, with no route to the internet by default. If network behavior must be observed (e.g., C2 communication), use a host-only network whose gateway is a separate VM running an intercepting proxy (like Burp Suite or OWASP ZAP) and DNS sinkholing for suspicious domains. Never connect analysis VMs directly to your production or home network.

- Prepare your VM: Install a clean, fully patched operating system (e.g., Windows 7 or 10, depending on malware targets). Install essential analysis tools before taking a snapshot. Avoid installing common security software (like mainstream AV) that malware might detect.

- Install Analysis Tools:

  - Sysinternals Suite (Procmon, Process Explorer)

  - Wireshark

  - Registry viewers

  - A good text editor or hex editor

  - Potentially debuggers and disassemblers if not using a dedicated analysis OS.

- Configure Snapshots: Take a clean snapshot of your VM *before* introducing any malware. This allows you to revert to a pristine state quickly after each analysis session. Always analyze the malware in a clean environment.

- Utilize a Proxy/Sinkhole: To observe traffic to command and control (C2) servers, set up a transparent proxy on the analysis network's gateway. For basic DNS sinkholing, redirect suspicious domains to a local IP that you control. Note that `dnscat2` and `iodine` are DNS tunneling tools rather than analysis tools; in a lab they are useful mainly for generating tunneling traffic to test whether your monitoring detects it.

- Monitor System Changes: Configure Procmon to log file system, registry, and process/thread activity. Filter aggressively to capture relevant events without overwhelming the log.

- Capture Memory Dumps: If the malware exhibits complex memory-resident behavior, capture a memory dump while it is still running, either with a tool like DumpIt inside the guest or by taking a memory snapshot from the hypervisor. Analyze this dump later with Volatility.
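The basic DNS sinkholing mentioned in the workshop steps can be done with nothing more than hosts-file entries on the analysis VM. The sketch below generates those lines from a list of domains. The function name `sinkhole_entries`, the default address `127.0.0.1`, and the example domains are all hypothetical; in practice you would point the entries at whichever lab machine is listening for the redirected traffic.

```python
def sinkhole_entries(domains, sinkhole_ip="127.0.0.1"):
    """Build hosts-file lines that point each domain at the sinkhole IP."""
    seen = set()
    lines = []
    for raw in domains:
        # Normalize case and drop any trailing root dot before de-duplicating.
        domain = raw.strip().lower().rstrip(".")
        if not domain or domain in seen:
            continue
        seen.add(domain)
        lines.append(f"{sinkhole_ip}\t{domain}")
    return lines


# Hypothetical domains taken from an earlier strings pass.
for line in sinkhole_entries(["c2.example.test", "update.example.test"]):
    print(line)
```

Hosts files match exact names only and do not support wildcards, so a sample that generates domains algorithmically needs a DNS server on the lab gateway instead.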

Frequently Asked Questions

- What's the difference between static and dynamic malware analysis? Static analysis examines malware without running it, like reading a blueprint. Dynamic analysis involves executing the malware in a controlled environment to observe its real-time behavior. Both are crucial for a comprehensive understanding.

- Is it safe to analyze malware on my own computer? Absolutely not. Malware analysis must be performed in a highly isolated environment, such as a dedicated virtual machine with no network connectivity or a carefully configured sandbox, to prevent infection.

- What are the essential tools for a beginner malware analyst? For beginners, the Sysinternals Suite (Procmon, Process Explorer), Wireshark, and a good disassembler like Ghidra are excellent starting points for exploring malware behavior.

- How can I stay updated on new malware threats? Follow reputable threat intelligence feeds, security news outlets, and cybersecurity researchers on platforms like Twitter and LinkedIn. Subscribing to security advisories from vendors and government agencies is also beneficial.

The Contract: Your First Reconnaissance Mission

You've been handed a suspicious executable file. Your mission, should you choose to accept it, is to perform initial reconnaissance.

1. **Calculate the SHA-256 hash** of the file.

2. **Use the `strings` command** to extract readable text from the binary.

3. **Analyze the output for any suspicious URLs, IP addresses, file paths, or unusual commands.**

4. **Document your findings** in a brief report, noting any potential indicators of compromise (IoCs) without executing the file.

This is your first step into the world of threat hunting and analysis. The digital world is a labyrinth. Understand its dangers, and you can navigate it safely.
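For readers who want to script the reconnaissance mission, the sketch below combines the hashing, string extraction, and indicator search into one function that never executes the sample. It is a rough approximation: the `triage` name and the two regular expressions are assumptions chosen for brevity, only ASCII strings of four or more characters are extracted (UTF-16 strings, common in Windows binaries, are missed), and the IPv4 pattern will also match things like version numbers.

```python
import hashlib
import re

# Runs of 4+ printable ASCII bytes, similar in spirit to the `strings` tool.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")
URL_RE = re.compile(r"https?://[^\s\"'<>]+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def triage(data: bytes) -> dict:
    """Hash the sample and pull candidate IoCs out of its readable strings."""
    strings = [m.group().decode("ascii")
               for m in PRINTABLE_RUN.finditer(data)]
    text = "\n".join(strings)
    return {
        "sha256": hashlib.sha256(data).hexdigest(),
        "string_count": len(strings),
        "urls": sorted(set(URL_RE.findall(text))),
        "ipv4": sorted(set(IPV4_RE.findall(text))),
    }
```

Given a sample saved under a hypothetical name such as `sample.bin`, `triage(open("sample.bin", "rb").read())` returns a dictionary that can be pasted into the report for the documentation step. Every hit is a candidate that still needs manual review.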